Digitizing Lloyd's Register

Historical shipping data from 1765 to present.

This is an in progress open-source project in digital humanities, in which I am seeking to reliably extract and structure the historical data in the corpus of Lloyd’s registers currently being digitized by the Lloyd's Register Foundation. The eventual goal of this is to create a dataset of all entries, with which historical research can be performed, and which can enhance larger historical records through network analysis. As this is a work in progress, the content of this page will change, and this page will track those evolutions.

The Project is written in Python, and the GitHub repository for the source code is located here

Historical Background

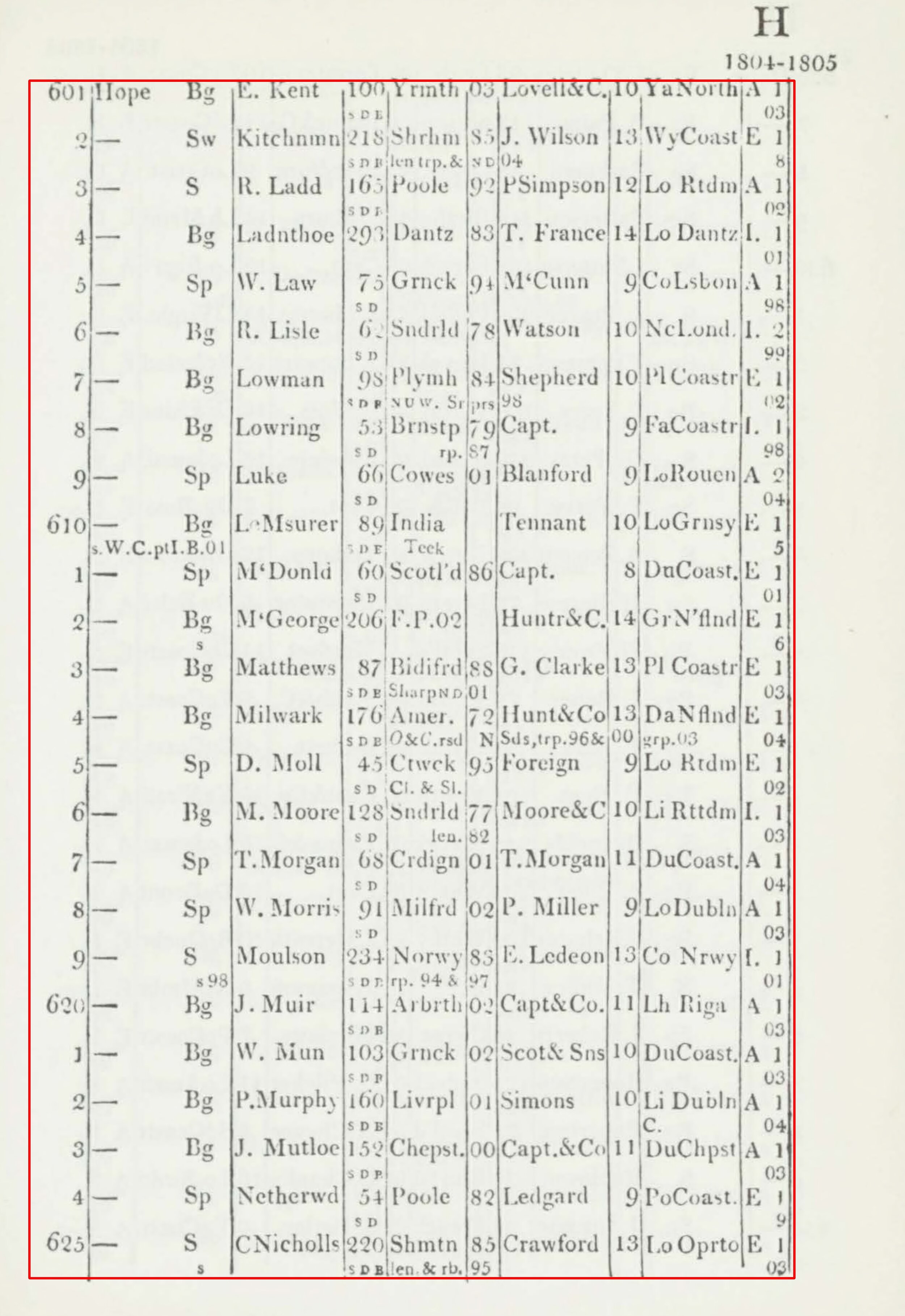

Named after the coffeehouse Lloyd's of London, Lloyd's Register is a printed record of the origins of the contemporary world. A set of yearly printed records, it chronicles the birth of modern markets in insurance, shipping, and financial speculation. Its roots go back to the 1600's, but in its present form, since 1765 until the present day it is a list of ships and owners with data such as insurable condition and normal trade routes. The Lloyd's Register foundation is itself today a nonprofit with the task of digitizing all manuscripts in their collection. The registers which this project encompasses are not the only data which Lloyds collected. as a proto [Data as a Service] organization, at various points in its 250 years of existence it has tracked shipwrecks, insurance returns and world fleet statistics.

However, the OCR which the register foundation provides for the tables is quite inaccurate. Besides missed letters, it has also scanned the letters in unstructured form. As the text of the registers is in the public domain, this seemed like an ideal introductory project to CV.

https://hec.lrfoundation.org.uk/archive-library/documents

intentions:

this project has two intentions: for me to teach myself the mechanics of text recognition and to provide for the academic community a robust well scanned dataset that encompasses every Lloyd's Register currently in the public domain. These Registers are ripe for a tailored OCR in that they

- Display data in near identical formatting for nearly 100 years

- Are in the public domain

- Have immense use to historians already

current status

So far, the project is at the point of successfully segmenting the pages into OCR-able lines and extracting the text with about 85% accuracy. The lists feature tightly packed text in a grid of varying proportions. This, combined with the small undertext and the arcane system of abbreviation has introduced difficulties rarely encountered in the common application of optical character recognition. To boost the accuracy of such recognitions, I am currently using an LSTM optical character recognition neural network. Additionally, I have written Text processing algorithms to comb the scans and repair artifacts from the scanning process. However, the next phase of this project is more complicated, as many of the registers have been annotated with handwritten notes. These parts of the historical record will require a second solution to extract meaningful text from the handwriting.

While these images have been modified, constituting fair use, any rights for all page scan remains with Lloyd’s Register.

Current fields in the dataset:

{"list_no" , "ship_name" , "master" ,"tonnage" , "home_port" , "length" , "underwriter" , "beam" , "route" , "condition" } Different fields which the algorithm finds.

oCR-ing text in python

So far, I have developed a relatively robust algorithm that segments the page and then divides the text box into fields.

Many of these pages are distorted due to age. For this reason, the scripts first use a [Probabilistic Hough Lines Transform] to establish the general location of the text fields. It then deskews the text. This completed, it uses the vertical lines and the weight of the text boxes to segment the now straightened rectangular text into bounding boxes. Many notes have been added to the bottom of entries in a smaller font. These notes are tracked and logged to the requisite data fields by their location and text size. As such the script is now able to derive information from 85% of entries. However, it is still vulnerable to edge cases and handwritten notes.